Get Track's Audio Analysis

Get a low-level audio analysis for a track in the Spotify catalog. The audio analysis describes the track’s structure and musical content, including rhythm, pitch, and timbre.

Request

- id string Required

The Spotify ID for the track.

Example: 11dFghVXANMlKmJXsNCbNl
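A minimal sketch of how a request for this endpoint might be assembled in Python. The endpoint path (`/v1/audio-analysis/{id}`), the bearer-token header, and the helper name `build_audio_analysis_request` are assumptions that this section does not state; check them against the official reference before relying on them. The request is only built, not sent.

```python
from urllib.request import Request

# Assumed base URL and path; not stated in this section.
API_BASE = "https://api.spotify.com/v1"


def build_audio_analysis_request(track_id: str, access_token: str) -> Request:
    """Build (but do not send) a GET request for one track's audio analysis."""
    if not track_id:
        raise ValueError("track_id must be a non-empty Spotify ID")
    return Request(
        f"{API_BASE}/audio-analysis/{track_id}",
        # Assumed: the API expects an OAuth bearer token.
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


# "YOUR_ACCESS_TOKEN" is a placeholder, not a working credential.
request = build_audio_analysis_request("11dFghVXANMlKmJXsNCbNl", "YOUR_ACCESS_TOKEN")
```

Passing the resulting object to `urllib.request.urlopen` would perform the call; that step is left out here so the sketch runs without network access.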

Response

Audio analysis for one track

- meta object

- analyzer_version string

The version of the Analyzer used to analyze this track.

Example: "4.0.0"

- platform string

The platform used to read the track's audio data.

Example: "Linux"

- detailed_status string

A detailed status code for this track. If analysis data is missing, this code may explain why.

Example: "OK"

- status_code integer

The return code of the analyzer process. 0 if successful, 1 if any errors occurred.

Example: 0

- timestamp integer

The Unix timestamp (in seconds) at which this track was analyzed.

Example: 1495193577

- analysis_time number

The amount of time taken to analyze this track.

Example: 6.93906

- input_process string

The method used to read the track's audio data.

Example: "libvorbisfile L+R 44100->22050"
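As a rough illustration of how the fields above might be used, the sketch below checks `status_code` before trusting the rest of the analysis and converts `timestamp` to a UTC datetime. The helper name `describe_meta` and the wording of its messages are hypothetical.

```python
from datetime import datetime, timezone


def describe_meta(meta: dict) -> str:
    """Summarise the analyzer metadata, reporting failures first."""
    status_code = meta.get("status_code")
    detail = meta.get("detailed_status", "unknown")
    # Per the field description: 0 means success, 1 means errors occurred.
    if status_code != 0:
        return f"analysis failed (status_code={status_code}, detailed_status={detail})"
    # timestamp is a Unix timestamp in seconds.
    analyzed_at = datetime.fromtimestamp(meta["timestamp"], tz=timezone.utc)
    return f"analyzed {analyzed_at.isoformat()} with analyzer {meta.get('analyzer_version')}"


example_meta = {
    "analyzer_version": "4.0.0",
    "detailed_status": "OK",
    "status_code": 0,
    "timestamp": 1495193577,
}
summary = describe_meta(example_meta)
```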

- track object

- num_samples integer

The exact number of audio samples analyzed from this track. See also analysis_sample_rate.

Example: 4585515

- duration number

Length of the track in seconds.

Example: 207.95985

- sample_md5 string

This field will always contain the empty string.

- offset_seconds integer

An offset to the start of the region of the track that was analyzed. (As the entire track is analyzed, this should always be 0.)

Example: 0

- window_seconds integer

The length of the region of the track that was analyzed, if a subset of the track was analyzed. (As the entire track is analyzed, this should always be 0.)

Example: 0

- analysis_sample_rate integer

The sample rate used to decode and analyze this track. May differ from the actual sample rate of this track available on Spotify.

Example: 22050

- analysis_channels integer

The number of channels used for analysis. If 1, all channels are summed together to mono before analysis.

Example: 1

- end_of_fade_in number

The time, in seconds, at which the track's fade-in period ends. If the track has no fade-in, this will be 0.0.

Example: 0

- start_of_fade_out number

The time, in seconds, at which the track's fade-out period starts. If the track has no fade-out, this should match the track's length.

Example: 201.13705

- loudness number [float]

The overall loudness of a track in decibels (dB). Loudness values are averaged across the entire track and are useful for comparing relative loudness of tracks. Loudness is the quality of a sound that is the primary psychological correlate of physical strength (amplitude). Values typically range between -60 and 0 dB.

Example: -5.883

- tempo number [float]

The overall estimated tempo of a track in beats per minute (BPM). In musical terminology, tempo is the speed or pace of a given piece and derives directly from the average beat duration.

Example: 118.211

- tempo_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the tempo.

Range: 0-1

Example: 0.73

- time_signature integer

An estimated time signature. The time signature (meter) is a notational convention to specify how many beats are in each bar (or measure). The time signature ranges from 3 to 7, indicating time signatures of "3/4" to "7/4".

Range: 3-7

Example: 4

- time_signature_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the time_signature.

Range: 0-1

Example: 0.994

- key integer

The key the track is in. Integers map to pitches using standard Pitch Class notation. E.g. 0 = C, 1 = C♯/D♭, 2 = D, and so on. If no key was detected, the value is -1.

Range: -1 to 11

Example: 9

- key_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the key.

Range: 0-1

Example: 0.408

- mode integer

Mode indicates the modality (major or minor) of a track, the type of scale from which its melodic content is derived. Major is represented by 1 and minor is 0.

Example: 0

- mode_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the mode.

Range: 0-1

Example: 0.485

- codestring string

An Echo Nest Musical Fingerprint (ENMFP) codestring for this track.

- code_version number

A version number for the Echo Nest Musical Fingerprint format used in the codestring field.

Example: 3.15

- echoprintstring string

An EchoPrint codestring for this track.

- echoprint_version number

A version number for the EchoPrint format used in the echoprintstring field.

Example: 4.15

- synchstring string

A Synchstring for this track.

- synch_version number

A version number for the Synchstring used in the synchstring field.

Example: 1

- rhythmstring string

A Rhythmstring for this track. The format of this string is similar to the Synchstring.

- rhythm_version number

A version number for the Rhythmstring used in the rhythmstring field.

Example: 1
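The key and mode fields are plain integers, so client code usually needs a small lookup to display them. The following is a minimal sketch of that mapping using the Pitch Class numbering described above; the names `PITCH_CLASSES`, `MODES`, and `key_name` are hypothetical helpers, not part of the API.

```python
# Pitch Class notation: 0 = C, 1 = C♯/D♭, 2 = D, ... 11 = B.
PITCH_CLASSES = [
    "C", "C♯/D♭", "D", "D♯/E♭", "E", "F",
    "F♯/G♭", "G", "G♯/A♭", "A", "A♯/B♭", "B",
]
MODES = {0: "minor", 1: "major"}


def key_name(key: int, mode: int) -> str:
    """Return a readable key such as 'A minor', or 'unknown' if no key was detected."""
    if not 0 <= key <= 11:  # -1 means no key was detected
        return "unknown"
    pitch = PITCH_CLASSES[key]
    mode_name = MODES.get(mode)  # any other value is treated as "no mode"
    return f"{pitch} {mode_name}" if mode_name else pitch


# Using the example values from the fields above (key 9, mode 0).
label = key_name(9, 0)
```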

- bars array of objects

The time intervals of the bars throughout the track. A bar (or measure) is a segment of time defined as a given number of beats.

- start number

The starting point (in seconds) of the time interval.

Example: 0.49567

- duration number

The duration (in seconds) of the time interval.

Example: 2.18749

- confidence number

The confidence, from 0.0 to 1.0, of the reliability of the interval.

Range: 0-1

Example: 0.925
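Because each bar is a (start, duration) interval, a common task is finding which bar contains a given playback position. The sketch below does this with a binary search. It assumes the intervals are sorted by start time, which this section does not state explicitly; `interval_index_at` is a hypothetical helper name.

```python
from bisect import bisect_right
from typing import Dict, List, Optional


def interval_index_at(intervals: List[Dict[str, float]], time_s: float) -> Optional[int]:
    """Return the index of the interval containing time_s, or None if there is none.

    Assumes intervals are sorted by their "start" value.
    """
    starts = [iv["start"] for iv in intervals]
    i = bisect_right(starts, time_s) - 1  # last interval starting at or before time_s
    if i < 0:
        return None  # time_s is before the first interval
    iv = intervals[i]
    if time_s >= iv["start"] + iv["duration"]:
        return None  # time_s falls in a gap or after the last interval
    return i


bars = [
    {"start": 0.49567, "duration": 2.18749, "confidence": 0.925},
    {"start": 2.68316, "duration": 2.2, "confidence": 0.9},  # made-up second bar
]
current_bar = interval_index_at(bars, 1.0)
```

The same helper works unchanged for any list of objects with start and duration fields.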

- beats array of objects

The time intervals of beats throughout the track. A beat is the basic time unit of a piece of music; for example, each tick of a metronome. Beats are typically multiples of tatums.

- start number

The starting point (in seconds) of the time interval.

Example: 0.49567

- duration number

The duration (in seconds) of the time interval.

Example: 2.18749

- confidence number

The confidence, from 0.0 to 1.0, of the reliability of the interval.

Range: 0-1

Example: 0.925
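Since tempo derives from the average beat duration, a local tempo estimate can be recovered from a run of beats as 60 divided by the mean duration. This is only a sketch of that arithmetic, not a description of how the analyzer computes its own tempo field; `bpm_from_beats` and its `min_confidence` parameter are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Optional


def bpm_from_beats(beats: List[Dict[str, float]], min_confidence: float = 0.0) -> Optional[float]:
    """Estimate beats per minute as 60 / (mean beat duration in seconds)."""
    durations = [
        b["duration"]
        for b in beats
        if b["confidence"] >= min_confidence and b["duration"] > 0
    ]
    if not durations:
        return None  # nothing reliable enough to estimate from
    return 60.0 / mean(durations)


# Made-up beats, each half a second long.
steady_beats = [
    {"start": 0.0, "duration": 0.5, "confidence": 0.9},
    {"start": 0.5, "duration": 0.5, "confidence": 0.8},
]
estimated_bpm = bpm_from_beats(steady_beats)
```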

- sections array of objects

Sections are defined by large variations in rhythm or timbre, e.g. chorus, verse, bridge, guitar solo, etc. Each section contains its own descriptions of tempo, key, mode, time_signature, and loudness.

- start number

The starting point (in seconds) of the section.

Example: 0

- duration number

The duration (in seconds) of the section.

Example: 6.97092

- confidence number

The confidence, from 0.0 to 1.0, of the reliability of the section's "designation".

Range: 0-1

Example: 1

- loudness number

The overall loudness of the section in decibels (dB). Loudness values are useful for comparing relative loudness of sections within tracks.

Example: -14.938

- tempo number

The overall estimated tempo of the section in beats per minute (BPM). In musical terminology, tempo is the speed or pace of a given piece and derives directly from the average beat duration.

Example: 113.178

- tempo_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the tempo. Some tracks contain tempo changes or sounds with no tempo (like pure speech), which would correspond to a low value in this field.

Range: 0-1

Example: 0.647

- key integer

The estimated overall key of the section. Values range from 0 to 11 and map to pitches using standard Pitch Class notation (e.g. 0 = C, 1 = C♯/D♭, 2 = D, and so on). If no key was detected, the value is -1.

Example: 9

- key_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the key. Songs with many key changes may correspond to low values in this field.

Range: 0-1

Example: 0.297

- mode number

Indicates the modality (major or minor) of a section, the type of scale from which its melodic content is derived. This field will contain a 0 for "minor", a 1 for "major", or a -1 for no result. Note that a major key (e.g. C major) is easily confused with the minor key 3 semitones lower (e.g. A minor), as both keys carry the same pitches.

- mode_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the mode.

Range: 0-1

Example: 0.471

- time_signature integer

An estimated time signature. The time signature (meter) is a notational convention to specify how many beats are in each bar (or measure). The time signature ranges from 3 to 7, indicating time signatures of "3/4" to "7/4".

Range: 3-7

Example: 4

- time_signature_confidence number

The confidence, from 0.0 to 1.0, of the reliability of the time_signature. Sections with time signature changes may correspond to low values in this field.

Range: 0-1

Example: 1
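The per-section loudness and confidence fields lend themselves to simple comparisons within one track. The sketch below picks the loudest section and keeps only tempos whose confidence clears a cutoff. The helper names and the 0.5 cutoff are illustrative assumptions; the API does not prescribe any threshold.

```python
from typing import Dict, List, Optional, Tuple


def loudest_section(sections: List[Dict[str, float]]) -> Optional[Dict[str, float]]:
    """Return the section with the highest loudness (dB values closer to 0 are louder)."""
    if not sections:
        return None
    return max(sections, key=lambda s: s["loudness"])


def reliable_tempos(
    sections: List[Dict[str, float]], min_confidence: float = 0.5
) -> List[Tuple[float, float]]:
    """Return (start, tempo) pairs for sections whose tempo_confidence meets the cutoff."""
    return [
        (s["start"], s["tempo"])
        for s in sections
        if s["tempo_confidence"] >= min_confidence  # 0.5 is an arbitrary default
    ]


sections = [
    {"start": 0, "loudness": -14.938, "tempo": 113.178, "tempo_confidence": 0.647},
    # Made-up second section for illustration.
    {"start": 6.97092, "loudness": -5.0, "tempo": 118.0, "tempo_confidence": 0.2},
]
loudest = loudest_section(sections)
tempos = reliable_tempos(sections)
```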

Each segment contains a roughly conisistent sound throughout its duration.

- startnumber

The starting point (in seconds) of the segment.

Example:0.70154 - durationnumber

The duration (in seconds) of the segment.

Example:0.19891 - confidencenumber

The confidence, from 0.0 to 1.0, of the reliability of the segmentation. Segments of the song which are difficult to logically segment (e.g: noise) may correspond to low values in this field.

Range:0-1Example:0.435 - loudness_startnumber

The onset loudness of the segment in decibels (dB). Combined with

loudness_maxandloudness_max_time, these components can be used to describe the "attack" of the segment.Example:-23.053 - loudness_maxnumber

The peak loudness of the segment in decibels (dB). Combined with

loudness_startandloudness_max_time, these components can be used to describe the "attack" of the segment.Example:-14.25 - loudness_max_timenumber

The segment-relative offset of the segment peak loudness in seconds. Combined with

loudness_startandloudness_max, these components can be used to desctibe the "attack" of the segment.Example:0.07305 - loudness_endnumber

The offset loudness of the segment in decibels (dB). This value should be equivalent to the loudness_start of the following segment.

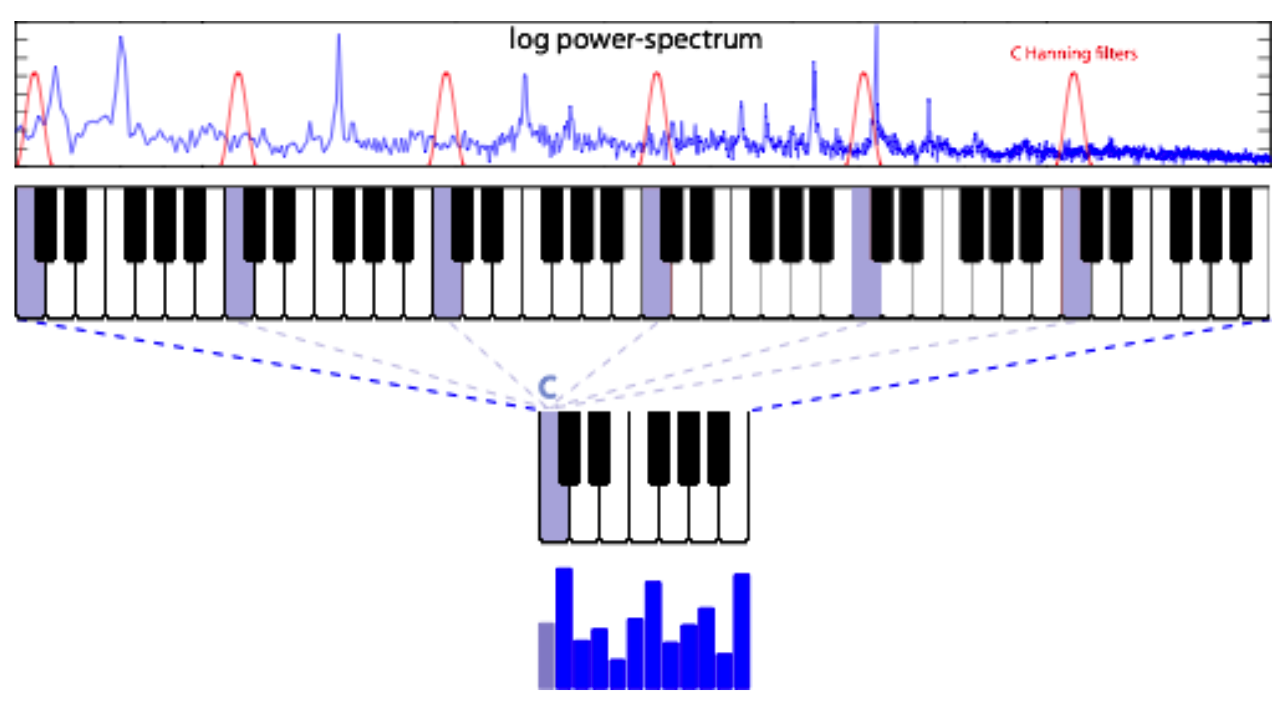

Example:0 - pitchesarray of numbers

Pitch content is given by a “chroma” vector, corresponding to the 12 pitch classes C, C#, D to B, with values ranging from 0 to 1 that describe the relative dominance of every pitch in the chromatic scale. For example a C Major chord would likely be represented by large values of C, E and G (i.e. classes 0, 4, and 7).

Vectors are normalized to 1 by their strongest dimension, therefore noisy sounds are likely represented by values that are all close to 1, while pure tones are described by one value at 1 (the pitch) and others near 0. As can be seen below, the 12 vector indices are a combination of low-power spectrum values at their respective pitch frequencies.

Example:

Example:[0.212,0.141,0.294]Range:0-1 - timbrearray of numbers

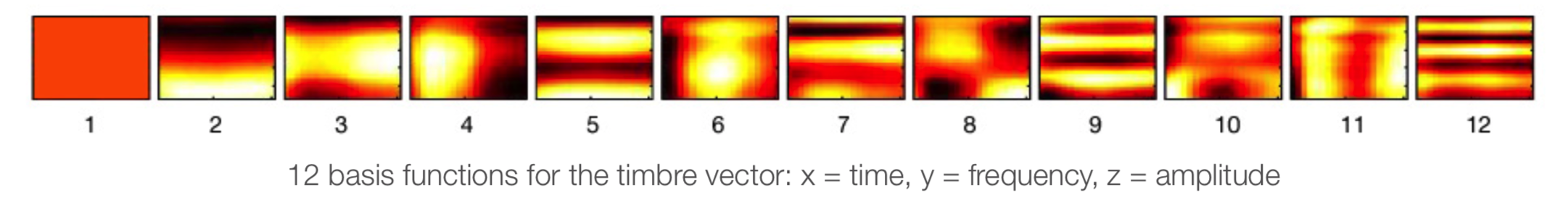

Timbre is the quality of a musical note or sound that distinguishes different types of musical instruments, or voices. It is a complex notion also referred to as sound color, texture, or tone quality, and is derived from the shape of a segment’s spectro-temporal surface, independently of pitch and loudness. The timbre feature is a vector that includes 12 unbounded values roughly centered around 0. Those values are high level abstractions of the spectral surface, ordered by degree of importance.

For completeness however, the first dimension represents the average loudness of the segment; second emphasizes brightness; third is more closely correlated to the flatness of a sound; fourth to sounds with a stronger attack; etc. See an image below representing the 12 basis functions (i.e. template segments).

The actual timbre of the segment is best described as a linear combination of these 12 basis functions weighted by the coefficient values: timbre = c1 x b1 + c2 x b2 + ... + c12 x b12, where c1 to c12 represent the 12 coefficients and b1 to b12 the 12 basis functions as displayed below. Timbre vectors are best used in comparison with each other.

Example:[42.115,64.373,-0.233]
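The two vector fields can be explored with very little code. The sketch below names the dominant pitch classes in a full 12-value chroma vector and compares two timbre vectors. Both helpers are hypothetical: the 0.8 threshold is arbitrary, and Euclidean distance is just one plausible way to compare timbre vectors, not a metric this reference specifies. The example vectors shown in this section are truncated to three values, so the sample data here is made up.

```python
import math
from typing import List, Sequence

PITCH_CLASSES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]


def dominant_pitch_classes(pitches: Sequence[float], threshold: float = 0.8) -> List[str]:
    """Name the pitch classes whose chroma value is at or above threshold."""
    if len(pitches) != 12:
        raise ValueError("a chroma vector must have exactly 12 values")
    return [PITCH_CLASSES[i] for i, value in enumerate(pitches) if value >= threshold]


def timbre_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two timbre vectors (an assumed comparison metric)."""
    if len(a) != len(b):
        raise ValueError("timbre vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Made-up chroma vector resembling a C major chord (classes 0, 4, and 7 dominate).
c_major_like = [1.0, 0.1, 0.1, 0.1, 0.9, 0.1, 0.1, 0.85, 0.1, 0.1, 0.1, 0.1]
chord_tones = dominant_pitch_classes(c_major_like)
```

A smaller distance suggests two segments sound more alike, but how small counts as "similar" depends on the material and is left to the caller.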

- tatums array of objects

A tatum represents the lowest regular pulse train that a listener intuitively infers from the timing of perceived musical events (segments).

- start number

The starting point (in seconds) of the time interval.

Example: 0.49567

- duration number

The duration (in seconds) of the time interval.

Example: 2.18749

- confidence number

The confidence, from 0.0 to 1.0, of the reliability of the interval.

Range: 0-1

Example: 0.925
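Because beats are typically multiples of tatums, counting how many tatums start inside each beat gives a rough picture of the subdivision. The following is a sketch under that reading; `tatums_per_beat` and its `eps` tolerance are hypothetical, and the tolerance exists only to absorb floating-point rounding at interval boundaries.

```python
from typing import Dict, List


def tatums_per_beat(
    beats: List[Dict[str, float]], tatums: List[Dict[str, float]], eps: float = 1e-6
) -> List[int]:
    """Count, for each beat, the tatums whose start falls inside that beat."""
    counts = []
    for beat in beats:
        start = beat["start"]
        end = start + beat["duration"]
        # A tatum belongs to the beat if it starts within [start, end), with tolerance.
        counts.append(
            sum(1 for t in tatums if start - eps <= t["start"] < end - eps)
        )
    return counts


# Made-up data: two half-second beats, each split into two tatums.
beats = [{"start": 0.0, "duration": 0.5}, {"start": 0.5, "duration": 0.5}]
tatums = [{"start": s, "duration": 0.25} for s in (0.0, 0.25, 0.5, 0.75)]
subdivision = tatums_per_beat(beats, tatums)
```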

Response sample

    {
      "meta": {
        "analyzer_version": "4.0.0",
        "platform": "Linux",
        "detailed_status": "OK",
        "status_code": 0,
        "timestamp": 1495193577,
        "analysis_time": 6.93906,
        "input_process": "libvorbisfile L+R 44100->22050"
      },
      "track": {
        "num_samples": 4585515,
        "duration": 207.95985,
        "sample_md5": "string",
        "offset_seconds": 0,
        "window_seconds": 0,
        "analysis_sample_rate": 22050,
        "analysis_channels": 1,
        "end_of_fade_in": 0,
        "start_of_fade_out": 201.13705,
        "loudness": -5.883,
        "tempo": 118.211,
        "tempo_confidence": 0.73,
        "time_signature": 4,
        "time_signature_confidence": 0.994,
        "key": 9,
        "key_confidence": 0.408,
        "mode": 0,
        "mode_confidence": 0.485,
        "codestring": "string",
        "code_version": 3.15,
        "echoprintstring": "string",
        "echoprint_version": 4.15,
        "synchstring": "string",
        "synch_version": 1,
        "rhythmstring": "string",
        "rhythm_version": 1
      },
      "bars": [
        { "start": 0.49567, "duration": 2.18749, "confidence": 0.925 }
      ],
      "beats": [
        { "start": 0.49567, "duration": 2.18749, "confidence": 0.925 }
      ],
      "sections": [
        {
          "start": 0,
          "duration": 6.97092,
          "confidence": 1,
          "loudness": -14.938,
          "tempo": 113.178,
          "tempo_confidence": 0.647,
          "key": 9,
          "key_confidence": 0.297,
          "mode": -1,
          "mode_confidence": 0.471,
          "time_signature": 4,
          "time_signature_confidence": 1
        }
      ],
      "segments": [
        {
          "start": 0.70154,
          "duration": 0.19891,
          "confidence": 0.435,
          "loudness_start": -23.053,
          "loudness_max": -14.25,
          "loudness_max_time": 0.07305,
          "loudness_end": 0,
          "pitches": [0.212, 0.141, 0.294],
          "timbre": [42.115, 64.373, -0.233]
        }
      ],
      "tatums": [
        { "start": 0.49567, "duration": 2.18749, "confidence": 0.925 }
      ]
    }